
Preface

ShuffleNet is a lightweight network for mobile devices proposed by Megvii Research.

It was designed to improve further on MobileNet.

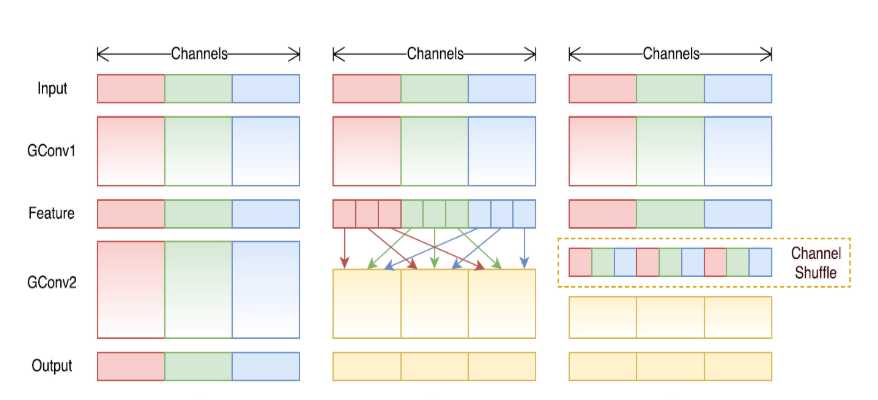

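The ShuffleNet authors observed that although MobileNet cuts model size by combining depthwise convolution with pointwise convolution (that is, convolution with 1×1 kernels), most of its parameters and computation end up in the 1×1 convolutions. ShuffleNet's fix is to split the feature maps into groups so that each convolution only operates on the feature maps inside its own group. Grouping, however, stops information from flowing between groups, so ShuffleNet also introduces channel shuffle, which mixes the channels of different groups so that every group of the next layer receives channels from every group of the previous one.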
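To see why the 1×1 convolutions dominate, it helps to count the weights in a single depthwise separable block. This is a minimal sketch that ignores biases and BatchNorm and assumes a 3×3 depthwise kernel with equal input and output channels; `separable_block_params` is a hypothetical helper, not part of the original code.

```python
def separable_block_params(c_in, c_out, k=3):
    """Weight counts (no biases) of one depthwise separable block:
    a k x k depthwise conv followed by a 1x1 pointwise conv."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # dense 1x1 conv connects every input channel to every output channel
    return depthwise, pointwise

for c in (64, 256, 512, 1024):
    dw, pw = separable_block_params(c, c)
    share = pw / (dw + pw)
    print(f"C={c:5d}  depthwise={dw:8d}  pointwise={pw:8d}  1x1 share={share:.1%}")
```

The depthwise cost grows linearly with the channel count while the pointwise cost grows quadratically, so the wider the layer, the larger the share taken by the 1×1 convolution.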

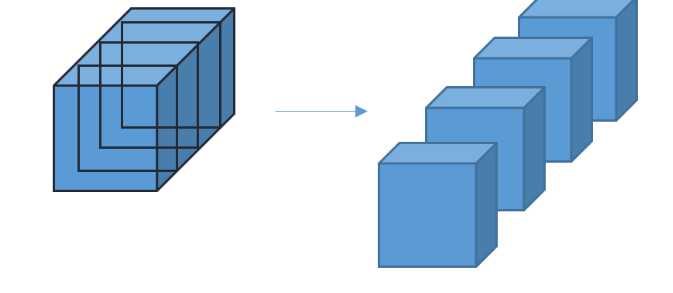

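Group Convolution

Group convolution simply means that each convolution only sees the feature maps inside its own group.

First, the whole input is split into groups along the channel dimension.

Then each group is convolved separately, and the group outputs are concatenated.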
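The two steps above can be sketched in NumPy for the 1×1 case that ShuffleNet uses. This is an illustrative sketch, assuming a channels-last feature map without a batch axis; `group_pointwise_conv` and the sizes are hypothetical. It also checks that a group convolution equals an ordinary 1×1 convolution whose weight matrix is block-diagonal, which is why it needs only 1/g of the weights.

```python
import numpy as np

def group_pointwise_conv(x, weights):
    """Grouped 1x1 convolution on a channels-last feature map.

    x:       array of shape (H, W, C_in)
    weights: list of g arrays, each of shape (C_in // g, C_out // g)
    """
    g = len(weights)
    c_in = x.shape[-1]
    assert c_in % g == 0, "input channels must split evenly into groups"
    step = c_in // g
    outputs = []
    for i, w in enumerate(weights):
        x_group = x[..., i * step:(i + 1) * step]  # this group's input channels only
        outputs.append(x_group @ w)                 # a 1x1 conv is a matmul over channels
    return np.concatenate(outputs, axis=-1)         # join group outputs along channels

rng = np.random.default_rng(0)
H, W, C_in, C_out, g = 4, 4, 8, 12, 4
x = rng.standard_normal((H, W, C_in))
weights = [rng.standard_normal((C_in // g, C_out // g)) for _ in range(g)]
y = group_pointwise_conv(x, weights)

# The same layer as an ordinary 1x1 conv: a block-diagonal weight matrix,
# with every cross-group weight fixed at zero.
dense_w = np.zeros((C_in, C_out))
for i, w in enumerate(weights):
    rows = slice(i * (C_in // g), (i + 1) * (C_in // g))
    cols = slice(i * (C_out // g), (i + 1) * (C_out // g))
    dense_w[rows, cols] = w

print(y.shape, np.allclose(y, x @ dense_w))
print("grouped weights:", sum(w.size for w in weights), "dense weights:", C_in * C_out)
```

The zero blocks are exactly the connections group convolution gives up: no output channel of group i ever sees an input channel of another group.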

Channel Shuffle

Let's start with a short numpy snippet:

```python
import numpy as np

d = np.array([0, 1, 2, 3, 4, 5, 6, 7, 8])
x = np.reshape(d, (3, 3))
x = np.transpose(x, [1, 0])  # transpose
x = np.reshape(x, (9,))      # flatten
print(x)
```

Output:

[0 3 6 1 4 7 2 5 8]

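The input originally runs from 0 to 8.

Suppose every three consecutive elements belong to one group (in the real layer, each element stands for one channel):

Group 1 holds 0, 1, 2

Group 2 holds 3, 4, 5

Group 3 holds 6, 7, 8

After the shuffle, 0, 1 and 2 are sent to groups 1, 2 and 3 respectively.

Likewise, 3, 4, 5 and 6, 7, 8 are each spread across the three groups, so the new group 1 is 0, 3, 6: one element from every original group.

A reshape followed by a transpose is all it takes to reorder the channels this way.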
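The same trick on a real channels-last batch looks like this. It is a NumPy sketch with a hypothetical `channel_shuffle` helper; it mirrors the `channels_last` branch of the Keras `_channel_shuffle` function in the implementation below, using 12 channels in 3 groups so the permutation is easy to read.

```python
import numpy as np

def channel_shuffle(x, groups):
    """Channel shuffle for a channels-last batch of shape (N, H, W, C)."""
    n, h, w, c = x.shape
    assert c % groups == 0, "channels must split evenly into groups"
    x = x.reshape(n, h, w, groups, c // groups)  # split C into (groups, channels_per_group)
    x = x.transpose(0, 1, 2, 4, 3)               # swap the two channel axes
    return x.reshape(n, h, w, c)                 # flatten back to C channels

# Fill every pixel with the channel index so the permutation is visible
x = np.tile(np.arange(12), (1, 2, 2, 1))         # shape (1, 2, 2, 12)
y = channel_shuffle(x, groups=3)
print(y[0, 0, 0])  # [ 0  4  8  1  5  9  2  6 10  3  7 11]
```

If the next group convolution again uses 3 groups, it reads 4 consecutive channels per group, for example `[0, 4, 8, 1]`, and each such chunk now contains channels from all three original groups (0–3, 4–7, 8–11).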

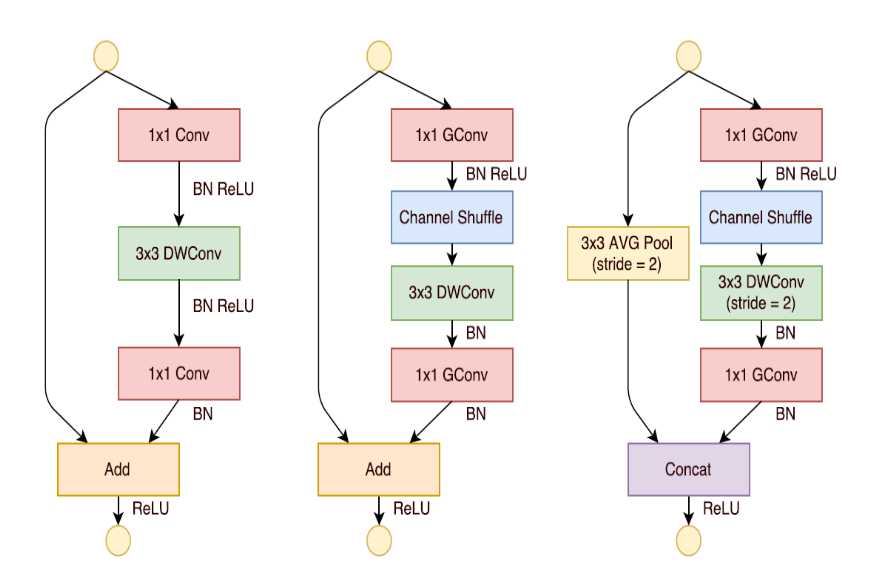

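ShuffleNet Unit

On the left is a bottleneck unit with depthwise convolution: a ResNet-style residual block with a skip connection, whose 3×3 convolution is replaced by a depthwise convolution as in MobileNet.

In the middle is the basic ShuffleNet unit: a 1×1 group convolution, channel shuffle, a 3×3 depthwise convolution and a second 1×1 group convolution, joined to the input by a residual add.

On the right is the ShuffleNet downsampling unit.

It is used when the stride is 2: the shortcut becomes a 3×3 average pooling with stride 2, and the two branches are concatenated instead of added.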
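The ShuffleNet paper quantifies the saving with per-unit cost formulas: for an input of size c×h×w and bottleneck width m, a ResNet unit needs hw(2cm + 9m²) FLOPs, a ResNeXt unit hw(2cm + 9m²/g), and a ShuffleNet unit hw(2cm/g + 9m), where g is the number of groups and FLOPs means multiply-adds. The sketch below just plugs numbers into those formulas; `unit_flops` is a hypothetical helper, and the example sizes are chosen to match stage 3 of the g = 8 model in the implementation below (768 channels, bottleneck ratio 0.25, 14×14 at a 224×224 input).

```python
def unit_flops(c, m, h, w, g):
    """Multiply-adds of one stride-1 bottleneck unit (formulas from the ShuffleNet paper).
    c: input/output channels, m: bottleneck channels, h x w: spatial size, g: groups."""
    resnet = h * w * (2 * c * m + 9 * m * m)          # dense 1x1, dense 3x3, dense 1x1
    resnext = h * w * (2 * c * m + 9 * m * m // g)    # dense 1x1 layers, grouped 3x3
    shufflenet = h * w * (2 * c * m // g + 9 * m)     # grouped 1x1 layers, depthwise 3x3
    return resnet, resnext, shufflenet

r, rx, s = unit_flops(c=768, m=192, h=14, w=14, g=8)
print(f"ResNet: {r:,}  ResNeXt: {rx:,}  ShuffleNet: {s:,}")
```

In the ShuffleNet formula the 3×3 term grows only linearly in m and the 1×1 term is divided by g, which is why the network can afford wider feature maps for the same budget.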

Implementation

The code in this post comes from https://blog.csdn.net/zjn295771349/article/details/89704086.

I added some comments of my own on top of it.

```python
# Written against the Keras 2.x API (keras.backend functions such as int_shape and reshape)
from keras.models import Model
from keras.layers import (Input, Conv2D, Dense, GlobalAveragePooling2D, Concatenate,
                          AveragePooling2D, Activation, BatchNormalization, add, ReLU,
                          DepthwiseConv2D, MaxPooling2D, Lambda)
from keras.utils import plot_model
from keras import backend as K


def _group_conv(x, filters, kernel, stride, groups):
    # Find the channel axis from the Keras image data format
    channel_axis = 1 if K.image_data_format() == 'channels_first' else -1
    in_channels = K.int_shape(x)[channel_axis]  # number of input channels
    # Both input and output channels must split evenly into the groups
    assert in_channels % groups == 0 and filters % groups == 0
    nb_ig = in_channels // groups  # input channels per group
    nb_og = filters // groups      # output channels per group
    gc_list = []
    for i in range(groups):
        # Lambda wraps a Python function as a layer. The slice bounds are bound
        # through default arguments: a plain closure over i would see only the
        # final value of i if the layer is re-executed (e.g. when the model is
        # called on new tensors or reloaded from disk).
        if channel_axis == -1:
            x_group = Lambda(lambda z, s=i * nb_ig, e=(i + 1) * nb_ig: z[:, :, :, s:e])(x)
        else:
            x_group = Lambda(lambda z, s=i * nb_ig, e=(i + 1) * nb_ig: z[:, s:e, :, :])(x)
        gc_list.append(Conv2D(filters=nb_og, kernel_size=kernel, strides=stride,
                              padding='same', use_bias=False)(x_group))
    # Concatenate the group outputs along the channel axis
    return Concatenate(axis=channel_axis)(gc_list)


def _channel_shuffle(x, groups):
    """
    Channel shuffle layer: shuffles channels with a reshape and a transpose.
    The idea in NumPy:
        d = np.array([0,1,2,3,4,5,6,7,8])
        x = np.reshape(d, (3,3))
        x = np.transpose(x, [1,0])  # transpose
        x = np.reshape(x, (9,))     # flatten
        [0 1 2 3 4 5 6 7 8] --> [0 3 6 1 4 7 2 5 8]
    :param x: input tensor
    :param groups: number of groups
    :return: tensor with the same shape as x and its channels shuffled
    """
    if K.image_data_format() == "channels_last":
        # Channels are the last axis: read height, width and channel count
        height, width, in_channels = K.int_shape(x)[1:]
        channels_per_group = in_channels // groups
        pre_shape = [-1, height, width, groups, channels_per_group]
        dim = (0, 1, 2, 4, 3)
        # Flatten the two channel axes back into in_channels
        later_shape = [-1, height, width, in_channels]
    else:
        # Channels are the first axis after the batch axis
        in_channels, height, width = K.int_shape(x)[1:]
        channels_per_group = in_channels // groups
        pre_shape = [-1, groups, channels_per_group, height, width]
        dim = (0, 2, 1, 3, 4)
        later_shape = [-1, in_channels, height, width]
    # -1 lets the backend infer the batch dimension from the other dimensions
    x = Lambda(lambda z: K.reshape(z, pre_shape))(x)
    x = Lambda(lambda z: K.permute_dimensions(z, dim))(x)  # swap groups and channels_per_group
    x = Lambda(lambda z: K.reshape(z, later_shape))(x)
    return x


def _shufflenet_unit(inputs, filters, kernel, stride, groups, stage, bottleneck_ratio=0.25):
    """
    ShuffleNet unit

    # Arguments
        inputs: Tensor, input tensor with `channels_last` or `channels_first` data format
        filters: Integer, number of output channels
        kernel: An integer or tuple/list of 2 integers, specifying the
            width and height of the depthwise convolution window.
        stride: An integer or tuple/list of 2 integers, specifying the strides
            of the depthwise convolution along the width and height.
            Can be a single integer to specify the same value for all spatial dimensions.
        groups: Integer, number of groups for the 1x1 group convolutions
        stage: Integer, stage number of ShuffleNet
        bottleneck_ratio: Float, ratio of bottleneck channels to output channels

    # Returns
        Output tensor

    # Note
        For Stage 2, the ShuffleNet authors do not apply group convolution on the first
        pointwise layer because the number of input channels is relatively small.
    """
    channel_axis = 1 if K.image_data_format() == 'channels_first' else -1
    in_channels = K.int_shape(inputs)[channel_axis]
    bottleneck_channels = int(filters * bottleneck_ratio)

    # First pointwise (1x1) layer: a plain convolution in stage 2, a group convolution otherwise
    if stage == 2:
        x = Conv2D(filters=bottleneck_channels, kernel_size=(1, 1), strides=1, padding='same',
                   use_bias=False)(inputs)
    else:
        x = _group_conv(inputs, bottleneck_channels, (1, 1), 1, groups)
    x = BatchNormalization(axis=channel_axis)(x)
    x = ReLU()(x)
    x = _channel_shuffle(x, groups)
    # Depthwise convolution carries the stride; no ReLU follows it
    x = DepthwiseConv2D(kernel_size=kernel, strides=stride, depth_multiplier=1, padding='same',
                        use_bias=False)(x)
    x = BatchNormalization(axis=channel_axis)(x)

    if stride == 2:
        # With stride 2 the unit becomes a downsampling unit. The average-pooled
        # input is concatenated to the output, so the second group conv only
        # needs filters - in_channels output channels.
        x = _group_conv(x, filters - in_channels, (1, 1), 1, groups)
        x = BatchNormalization(axis=channel_axis)(x)
        avg = AveragePooling2D(pool_size=(3, 3), strides=2, padding='same')(inputs)
        x = Concatenate(axis=channel_axis)([avg, x])
    else:
        x = _group_conv(x, filters, (1, 1), 1, groups)
        x = BatchNormalization(axis=channel_axis)(x)
        x = add([x, inputs])
    # As in the paper, ReLU is applied after the add / concat
    x = ReLU()(x)
    return x


def _stage(x, filters, kernel, groups, repeat, stage):
    # The first unit of each stage downsamples (stride 2); the remaining units keep the size
    x = _shufflenet_unit(x, filters, kernel, 2, groups, stage)
    for _ in range(1, repeat):
        x = _shufflenet_unit(x, filters, kernel, 1, groups, stage)
    return x


def shuffleNet(input_shape, classes):
    inputs = Input(shape=input_shape)
    x = Conv2D(24, (3, 3), strides=2, padding='same', use_bias=True, activation='elu')(inputs)
    x = MaxPooling2D(pool_size=(3, 3), strides=2, padding='same')(x)
    # groups=8 setting: 384/768/1536 output channels; repeat counts include the
    # downsampling unit (1 + 3, 1 + 7, 1 + 3 units)
    x = _stage(x, filters=384, kernel=(3, 3), groups=8, repeat=4, stage=2)
    x = _stage(x, filters=768, kernel=(3, 3), groups=8, repeat=8, stage=3)
    x = _stage(x, filters=1536, kernel=(3, 3), groups=8, repeat=4, stage=4)
    x = GlobalAveragePooling2D()(x)
    x = Dense(classes)(x)
    predicts = Activation('softmax')(x)
    model = Model(inputs, predicts)
    return model


if __name__ == '__main__':
    model = shuffleNet((224, 224, 3), 1000)
    model.summary()
    plot_model(model, to_file='./shuffleNet.png')  # requires pydot and graphviz
```
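As a sanity check on the model above, the spatial size and channel count after each stage can be traced by hand. This is a plain-Python sketch with a hypothetical `feature_map_sizes` helper; it assumes `padding='same'`, under which a stride-s layer outputs ceil(size / s), and it lists only the layers that change the shape (within a stage, only the first unit has stride 2).

```python
import math

def feature_map_sizes(input_size=224):
    """Trace spatial size and channels through shuffleNet() with groups=8."""
    layers = [
        ("conv1 (3x3, s2)", 2, 24),
        ("maxpool (3x3, s2)", 2, 24),
        ("stage2", 2, 384),
        ("stage3", 2, 768),
        ("stage4", 2, 1536),
    ]
    size = input_size
    trace = []
    for name, stride, channels in layers:
        size = math.ceil(size / stride)  # 'same' padding: ceil(input / stride)
        trace.append((name, size, channels))
    return trace

for name, size, channels in feature_map_sizes(224):
    print(f"{name:18s} {size:3d} x {size:3d} x {channels}")
```

The shapes printed this way should line up with the ones reported by `model.summary()`, ending at 7 × 7 × 1536 before global average pooling.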
