
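Image stitching and blending are the basic operations behind panorama stitching. OpenCV provides a `Stitcher` class whose `stitch` method is quite complete, but rather slow.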

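I implemented a similar method of my own. Its pipeline is: feature extraction, feature matching, perspective transformation, mask generation, and feathered blending.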

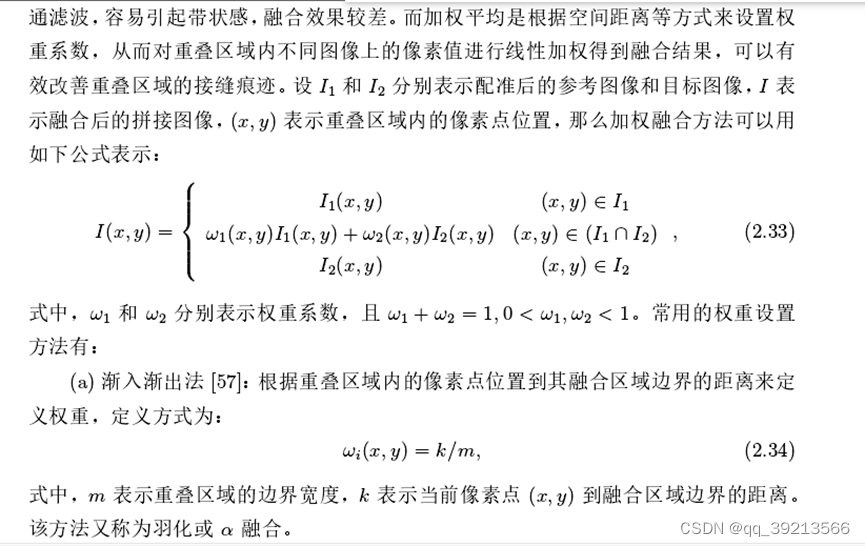

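In the feathering algorithm, as shown in the figure below, the region where the two images overlap is the region to be blended. The farther a point is from the blending boundary that belongs to image a (the edge of the overlap that adjoins the part covered only by image a), the smaller image a's blending weight at that point.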
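A one-dimensional sketch makes the weighting rule concrete. It assumes image a lies to the left of image b and the two share `overlap` samples; a's weight falls linearly from 1 at the seam on a's side to 0 at the seam on b's side, and b takes the complementary weight. The linear falloff and the name `feather_1d` are illustrative choices, not taken from the article.

```python
import numpy as np


def feather_1d(a, b, overlap):
    """Feather-blend two 1-D signals whose last/first `overlap` samples cover the same positions."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    # Weight of a: 1 on a's seam, decreasing linearly to 0 on b's seam.
    w_a = np.linspace(1.0, 0.0, overlap)
    blended = w_a * a[len(a) - overlap:] + (1.0 - w_a) * b[:overlap]
    # a alone, then the blended overlap, then b alone.
    return np.concatenate([a[: len(a) - overlap], blended, b[overlap:]])
```

For example, blending a constant 100 signal with a constant 200 signal over 5 shared samples gives `[100, 100, 125, 150, 175, 200, 200]`: no jump at either seam.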

The weight mask must therefore satisfy one constraint:

Weights are assigned by distance, and at every point on image a's blending boundary, image a's weight is 1 and image b's weight is 0.

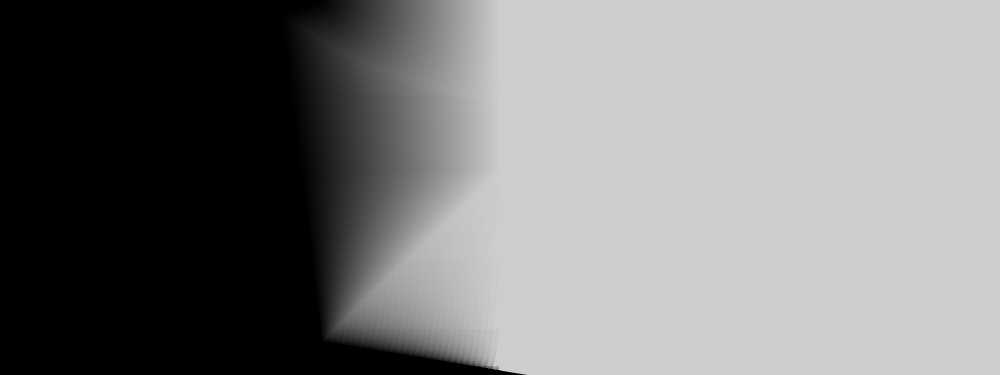

```python
import cv2
import numpy as np


def image_rotate(src, rotate=0):
    """Rotate an image by `rotate` degrees about its top-left corner (simulates misalignment)."""
    h, w = src.shape[:2]
    cos_val = np.cos(np.deg2rad(rotate))
    sin_val = np.sin(np.deg2rad(rotate))
    M = np.float32([[cos_val, sin_val, 0], [-sin_val, cos_val, 0]])
    return cv2.warpAffine(src, M, (w, h))


# Open the image files.
img1_color = image_rotate(cv2.imread("picb.png"), 0)  # Image to be aligned.
img2_color = image_rotate(cv2.imread("pica.png"), 5)  # Reference image.

# Convert to grayscale.
img1 = cv2.cvtColor(img1_color, cv2.COLOR_BGR2GRAY)
img2 = cv2.cvtColor(img2_color, cv2.COLOR_BGR2GRAY)
height, width = img2.shape

# Create an ORB detector with up to 5000 features.
orb_detector = cv2.ORB_create(5000)

# Find keypoints and descriptors (no detection mask is needed here).
kp1, d1 = orb_detector.detectAndCompute(img1, None)
kp2, d2 = orb_detector.detectAndCompute(img2, None)

# Brute-force matcher with the Hamming distance (ORB descriptors are binary),
# matches sorted by distance.
matcher = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=True)
matches = sorted(matcher.match(d1, d2), key=lambda m: m.distance)

# Keep the best 90 % of the matches.
matches = matches[:int(len(matches) * 0.9)]
p1 = np.float32([kp1[m.queryIdx].pt for m in matches])
p2 = np.float32([kp2[m.trainIdx].pt for m in matches])

# Homography that maps img1 onto the reference image img2.
homography, mask = cv2.findHomography(p1, p2, cv2.RANSAC)

# --- Image fusion ---
# Masks of the area each image covers on a canvas twice as wide as the reference.
img1_mask = np.full(img1.shape, 255, np.uint8)
img1_mask = cv2.warpPerspective(img1_mask, homography, (width * 2, height))
img2_mask = np.full(img2.shape, 255, np.uint8)
img2_mask = cv2.copyMakeBorder(img2_mask, 0, 0, 0, width, cv2.BORDER_CONSTANT, value=0)
img_and = cv2.bitwise_and(img1_mask, img2_mask)  # overlap region
img_xor = cv2.bitwise_xor(img1_mask, img_and)    # region covered by img1 only

# Distance transform: distance of each img1 pixel to the border of img1's region.
img1_distance = cv2.distanceTransform(img1_mask, cv2.DIST_L2, cv2.DIST_MASK_PRECISE)
_, img1_distance = cv2.threshold(img1_distance, 255, 255, cv2.THRESH_TRUNC)
img1_distance = np.uint8(img1_distance)
dst = cv2.bitwise_and(img1_distance, img_and)  # keep distances inside the overlap only

# Place both grayscale images on the same canvas.
img2_g = cv2.copyMakeBorder(img2, 0, 0, 0, width, cv2.BORDER_CONSTANT, value=0)
img1_g = cv2.warpPerspective(img1, homography, (width * 2, height))

# Satisfy the constraint: scale each row of the overlap so its largest distance
# gets weight 1 (rows without overlap stay 0 instead of dividing by zero).
weight = dst.astype(np.float64)
row_max = weight.max(axis=1, keepdims=True)
weight = np.divide(weight, row_max, out=np.zeros_like(weight), where=row_max > 0)
weight += img_xor / 255.0  # img1 alone: weight 1

cv2.imwrite("mask.jpg", np.uint8(weight * 255))
fusion_img = (1.0 - weight) * img2_g + weight * img1_g
cv2.imwrite("stitch and fusion.jpg", np.clip(fusion_img, 0, 255).astype(np.uint8))
```

Building the blending mask (satisfying the feathering constraint)
